Challenges to the CO2 Global Warming Hypothesis: (6) The Greenhouse Effect Doesn’t Exist, Revisited

As a further addendum to my series of posts in 2020 and 2021 on the CO2 global warming hypothesis, this post presents another challenge to the hypothesis central to the belief that humans make a substantial contribution to climate change. The hypothesis is that observed global warming – currently about 0.85 degrees Celsius (1.5 degrees Fahrenheit) since the preindustrial era – has been caused primarily by human emissions of CO2 and other greenhouse gases into the atmosphere.

The challenge, made in two papers published by Australian scientist Robert Holmes in 2017 and 2018, purports to show that there is no greenhouse effect, a heretical claim that even global warming skeptics such as me find dubious. According to the papers’ author, greenhouse gases in the earth’s atmosphere have played essentially no role in heating the earth, either before or after human emissions of such gases began.

The papers are similar to one that I discussed in an earlier post in the series, by U.S. research scientists Ned Nikolov and Karl Zeller, who claim that planetary temperature is controlled by only two forcing variables. A forcing is a disturbance that alters climate, producing heating or cooling. The two forcings are the total solar irradiance, or total energy from the sun incident on the atmosphere, and the total atmospheric pressure at a planetary body’s surface.

In Nikolov and Zeller’s model, the radiative effects integral to the greenhouse effect are replaced by a previously unknown thermodynamic relationship between air temperature, solar heating and atmospheric pressure, analogous to compression heating of the atmosphere.

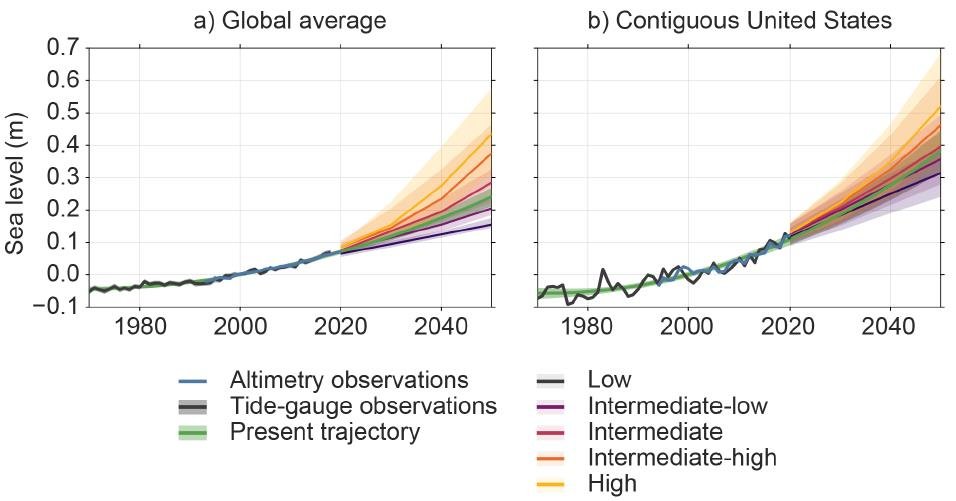

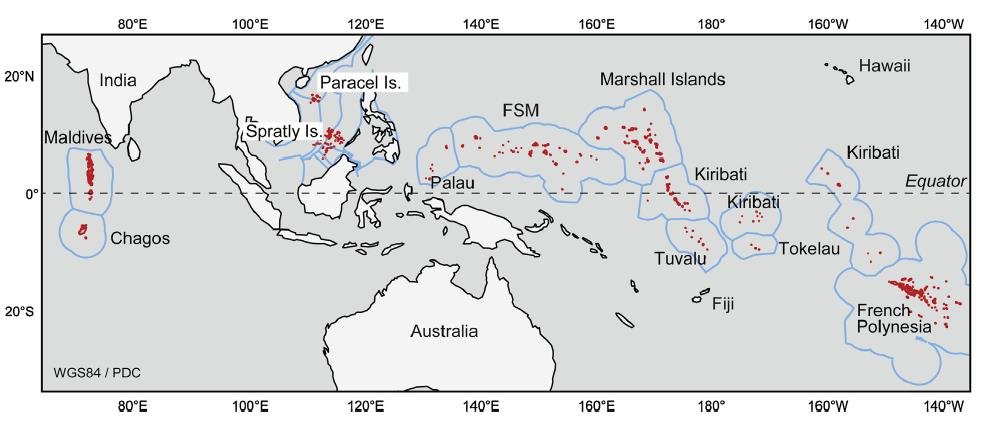

Their findings are illustrated in the accompanying figure, where the red line shows the modeled mean surface temperature, and the circles the measured mean surface temperature, of the rocky planets and moons in the solar system that have atmospheres: Venus, Earth, Mars, our Moon, Titan (a moon of Saturn) and Triton (a moon of Neptune). Ts is the surface temperature and Tna the calculated temperature with no atmosphere.

Like Nikolov and Zeller, Holmes claims that the temperatures of all planets and moons with an atmosphere are determined only by insolation and surface atmospheric pressure, but with a twist. The twist, in the case of Earth, is that its temperature of -18.0 degrees Celsius (-0.4 degrees Fahrenheit) in the absence of an atmosphere is entirely due to heating by the sun, but the additional 33 degrees Celsius (59 degrees Fahrenheit) of warmth provided by the atmosphere comes solely from atmospheric compression heating.

Holmes argues that the extra 33 degrees Celsius (59 degrees Fahrenheit) of heating cannot be provided by the greenhouse effect. If it were, he says, planetary surface temperatures could not be accurately calculated using the ideal gas law, as Holmes shows that they can.
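For readers wondering where the no-atmosphere figure of about -18 degrees Celsius comes from, it is conventionally obtained from a simple radiative balance between absorbed sunlight and emitted blackbody radiation. The following is only a minimal sketch of that textbook calculation, not anything taken from Holmes' papers; the solar constant (1361 watts per square meter) and albedo (0.30) are assumed round values, and the function name is hypothetical.

```python
# Radiative-balance estimate of a planet's temperature without a greenhouse effect.
# Assumed inputs (textbook round values, not from Holmes' papers):
#   solar constant = 1361 W/m^2, planetary albedo = 0.30

SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def effective_temperature(solar_constant, albedo):
    """Return the blackbody equilibrium temperature in kelvin."""
    # Sunlight is intercepted over a disk but emitted from the whole sphere,
    # hence the factor of 4; the albedo is the fraction reflected back to space.
    absorbed = solar_constant * (1.0 - albedo) / 4.0
    return (absorbed / SIGMA) ** 0.25


t_kelvin = effective_temperature(solar_constant=1361.0, albedo=0.30)
t_celsius = t_kelvin - 273.15
print(f"{t_kelvin:.1f} K = {t_celsius:.1f} deg C")
```

With these assumed inputs the result is roughly 255 kelvins, or about -18 degrees Celsius, which is some 33 degrees Celsius below Earth’s observed mean surface temperature of about 15 degrees Celsius.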

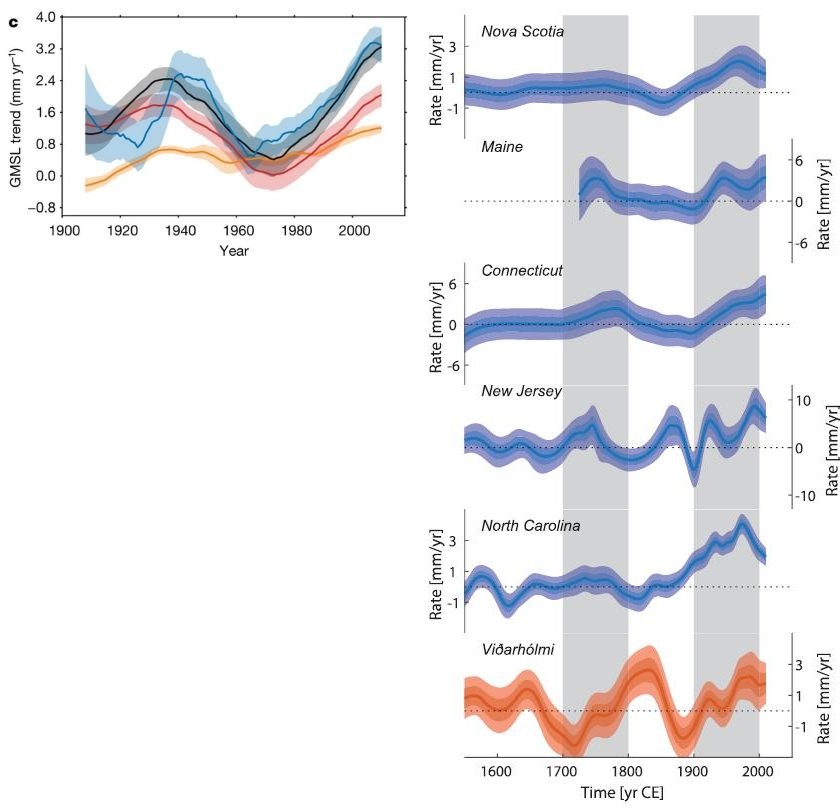

The next figure compares Holmes’ calculated temperatures for seven planets including Earth, the moon Titan and Earth’s South Pole with the actual values. The calculated temperatures use the ideal gas law in the form T = PM/(Rρ), where T is the near-surface temperature, P is the near-surface atmospheric pressure, M is the mean molar mass near the surface, R is the gas constant and ρ is the near-surface atmospheric density.

However, the close agreement between calculated and actual surface temperatures is not as remarkable as Holmes thinks, simply because we would expect planets and moons with an atmosphere to obey the ideal gas law.
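To see why the agreement is expected, it helps to put numbers into T = PM/(Rρ), where P is the near-surface pressure. The sketch below is my own illustration, not Holmes’ calculation; it assumes standard sea-level values for Earth (pressure 101,325 pascals, molar mass of dry air 0.0289644 kilograms per mole, density 1.225 kilograms per cubic meter), and the function name is hypothetical.

```python
# Ideal gas law rearranged for temperature: T = P * M / (R * rho).
# Assumed inputs: standard sea-level values for Earth's atmosphere,
# chosen for illustration and not taken from Holmes' papers.

R = 8.314462618  # molar gas constant, J mol^-1 K^-1


def ideal_gas_temperature(pressure_pa, molar_mass_kg_per_mol, density_kg_per_m3):
    """Return the temperature in kelvin implied by the ideal gas law."""
    return pressure_pa * molar_mass_kg_per_mol / (R * density_kg_per_m3)


t_earth = ideal_gas_temperature(
    pressure_pa=101325.0,             # sea-level pressure
    molar_mass_kg_per_mol=0.0289644,  # mean molar mass of dry air
    density_kg_per_m3=1.225,          # sea-level air density
)
print(f"{t_earth:.1f} K = {t_earth - 273.15:.1f} deg C")
```

The result is close to 288 kelvins (about 15 degrees Celsius), Earth’s mean surface temperature. But any measured pressure, density and molar mass for a gas that behaves ideally will return its measured temperature, whatever physical process set that temperature in the first place.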

In any case, the argument of both Holmes and Nikolov and Zeller that compression of the atmosphere can explain greenhouse heating has been invalidated by PhD meteorologist Roy Spencer. Spencer points out that, if atmospheric pressure causes the lower troposphere (the lowest layer of the atmosphere) to be warmer than the upper troposphere, then the same should be true of the stratosphere, where the pressure at the bottom of this atmospheric layer is about 100 times larger than that at the top.

Yet the bottom of the stratosphere is cooler than the top for all planets except Venus, as can be seen clearly from the following figure of Holmes. The vertical scale of decreasing pressure is equivalent to increasing altitude; the dotted horizontal line at 0.100 bar (10 kilopascals) marks the boundary between the troposphere and stratosphere.

Both of these farfetched claims that there is no greenhouse effect stem from misunderstandings about energy, as I discussed in my earlier post.
