Southern Ocean Cooling: Climate Scientists Unable to Agree on Explanation

Contrary to the predictions of climate models, sea surface temperatures in the Southern Ocean around Antarctica have cooled over several decades. Over the last 15 months, three completely different explanations have been proposed for this global warming anomaly.

The first paper, published in November 2024 by a team of mostly Spanish researchers, postulated that the cooling comes from previously unstudied marine sulfur emissions. It’s been known for some time that sulfate aerosol particles linger in the atmosphere, reflecting incoming sunlight and also acting as condensation nuclei for the formation of reflective clouds. Both effects cause global cooling.

But what wasn’t known before is that there are two sources of marine sulfur emissions. Both come from microscopic plankton that live near the ocean surface and emit sulfur gases that go on to form aerosols. The previously known source is dimethyl sulfide ((CH₃)₂S), which is mainly responsible for the distinctively pungent smell of seafood. Emission of the more reactive methanethiol (CH₃SH, also known as methyl mercaptan), hitherto neglected, has been quantified for the first time by the Spanish research team.

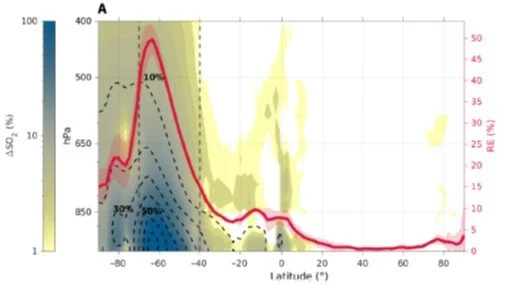

The researchers found that emissions of CH₃SH increase the formation of sulfate aerosols in the atmosphere by 30% to 70% over the Southern Ocean; this decreases incident solar radiation in summer by between 0.3 and 1.5 watts per square meter, enhancing the expected aerosol cooling effect. The maximum effect, which occurs at 65°S, is illustrated in the figure below, where the red line denotes the increase in total sulfate aerosol emission as a function of latitude.

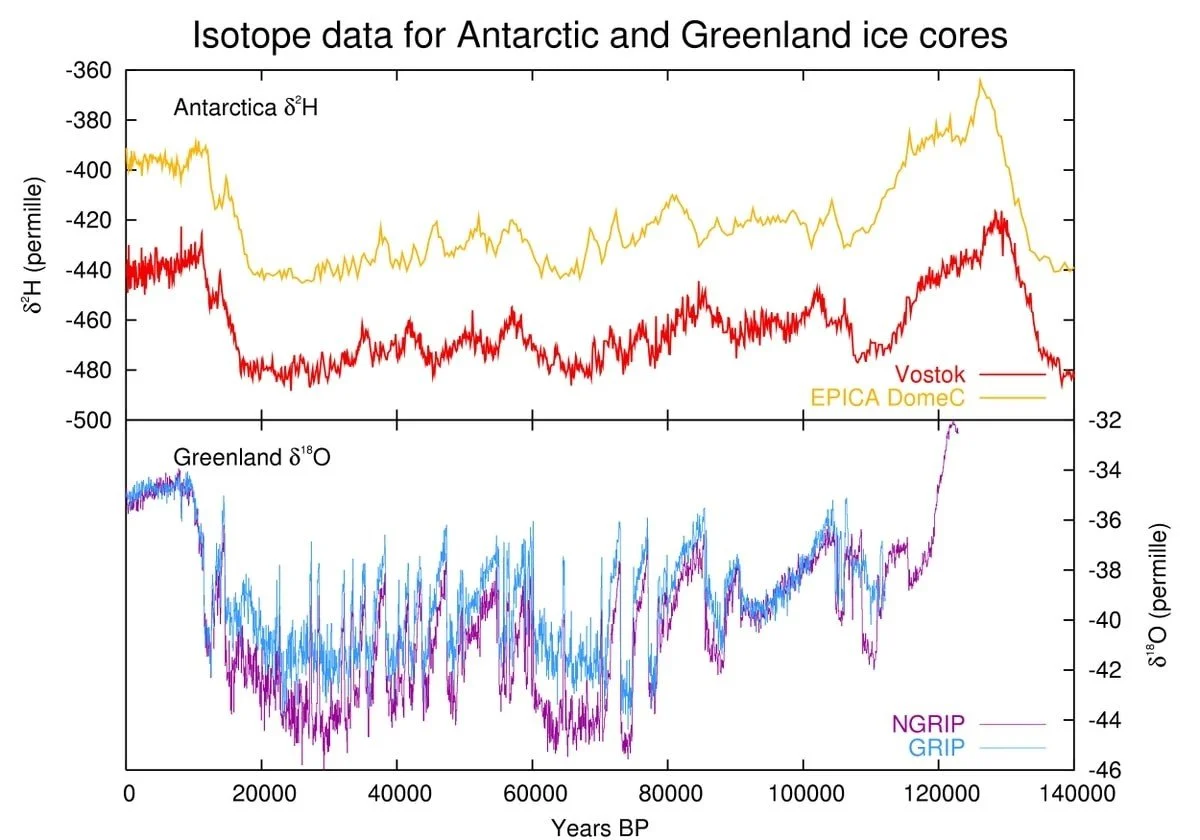

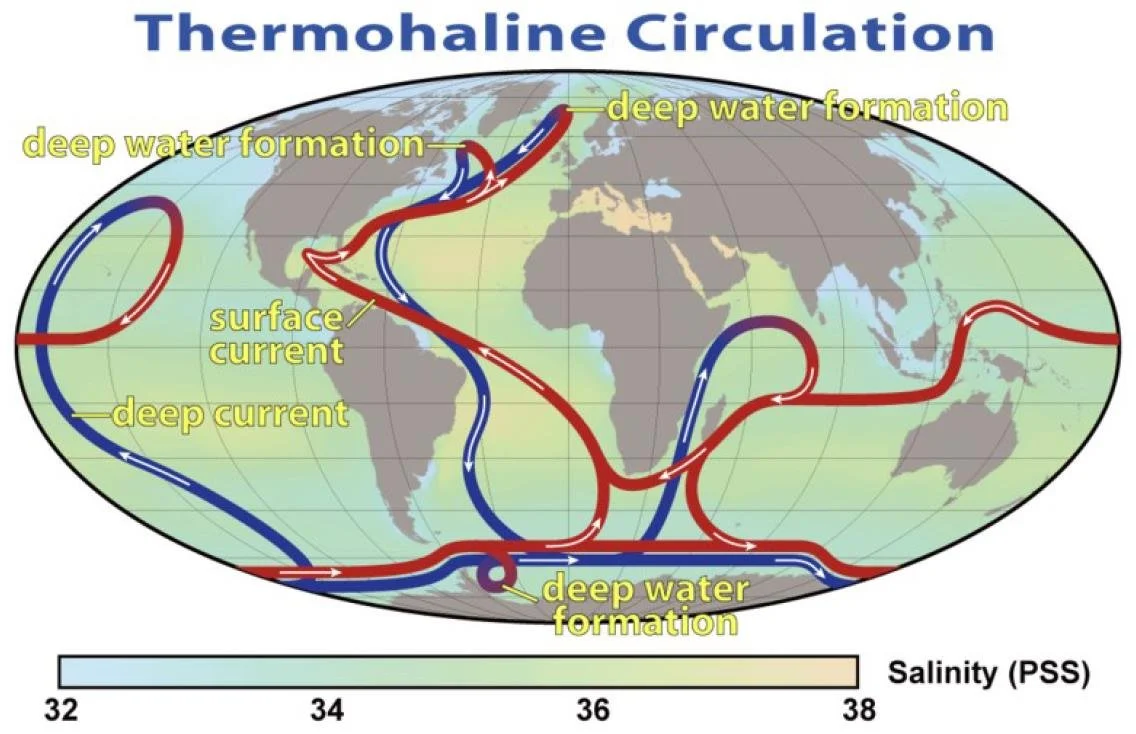

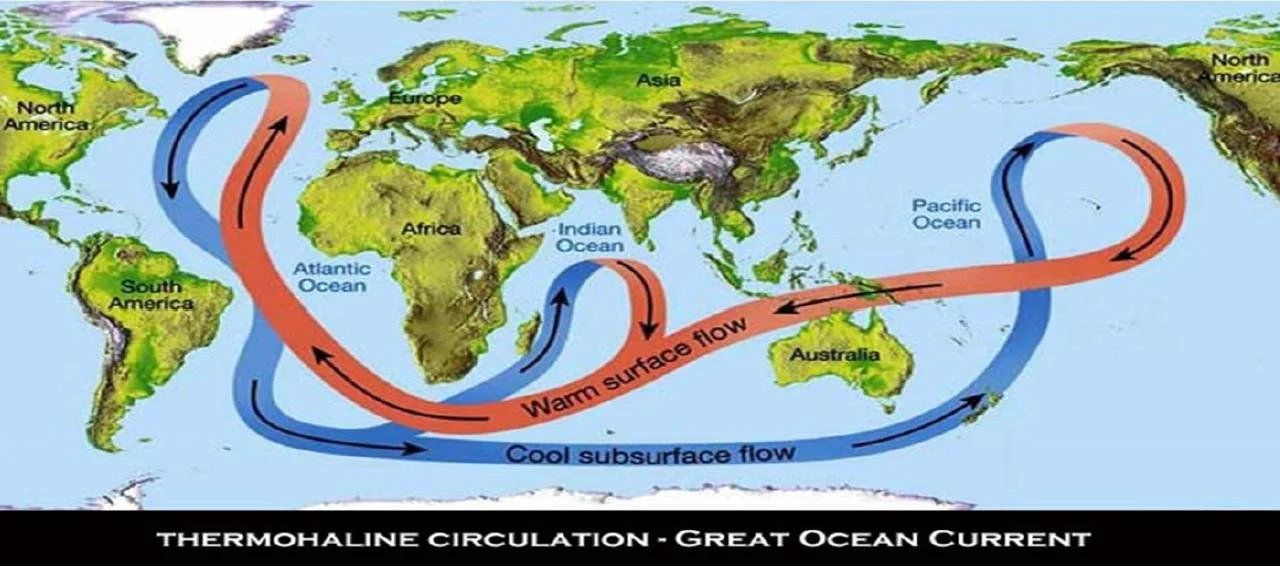

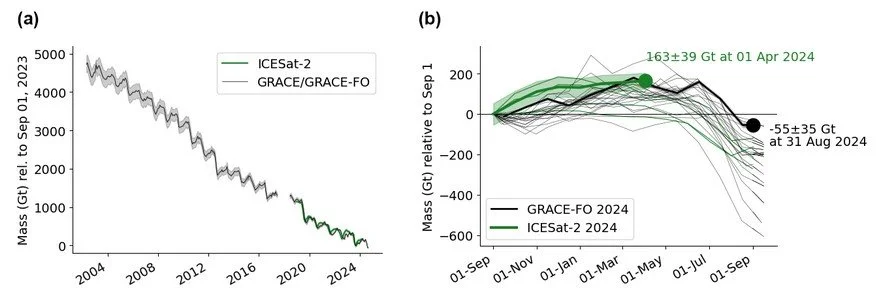

The second paper, published in March 2025, takes a rather different tack, proposing that the unexpected Southern Ocean cooling results from an influx of freshwater that is not incorporated in state-of-the-art climate models and that restricts the exchange of surface waters with deeper, warmer waters.

The authors, an international team of earth scientists, found that over the period from 1990 to 2021, underestimated freshwater fluxes can explain up to 60% of the difference between observed and modeled sea surface temperatures in the Southern Ocean. The freshwater consists of both Antarctic meltwater, which is completely missing from most climate models, and underestimated rainfall over the ocean.

To quantify the effect of freshwater on Southern Ocean sea surface temperatures, the researchers used an ensemble of 17 coupled climate and ocean models that simulate changes in ocean density and circulation. Separate simulations investigated step changes in forcing from two sources of surface freshwater flux: melting of the Antarctic ice sheet, and rainfall combined with sea ice melt.

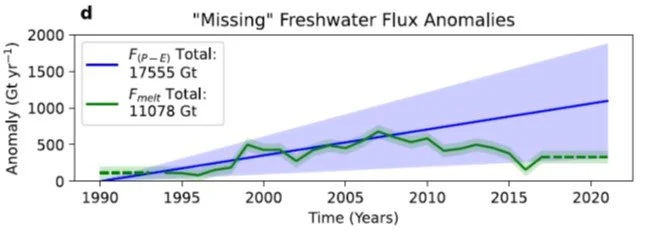

Some of their results are displayed in the next figure, which shows estimates of the freshwater flux, measured in gigatonnes per year (Gt, where 1 gigatonne = 1.102 U.S. gigatons), from 1990 to 2021. The steadily increasing rainfall (plus sea ice) contribution is represented by the blue line and shading; the green line represents the Antarctic meltwater contribution, which is seen to diminish with time.
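The conversion factor quoted above follows directly from the unit definitions: a metric tonne is 1,000 kg, while a U.S. (short) ton is 2,000 pounds, or exactly 907.18474 kg. Because the "giga" prefix is common to both, only the ratio of the base units matters. A minimal Python sketch of the conversion (the function and constant names are purely illustrative):

```python
# Exact definitions: 1 lb = 0.45359237 kg, and 1 U.S. short ton = 2,000 lb.
KG_PER_TONNE = 1000.0
KG_PER_SHORT_TON = 2000 * 0.45359237  # = 907.18474 kg


def gigatonnes_to_us_gigatons(gt: float) -> float:
    """Convert a mass in metric gigatonnes to U.S. (short) gigatons."""
    # The shared "giga" prefix cancels, leaving the ratio of tonne to short ton.
    return gt * KG_PER_TONNE / KG_PER_SHORT_TON


print(round(gigatonnes_to_us_gigatons(1.0), 3))  # 1.102
```

By the same arithmetic, a hypothetical flux of 100 Gt per year would correspond to about 110 U.S. gigatons per year.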

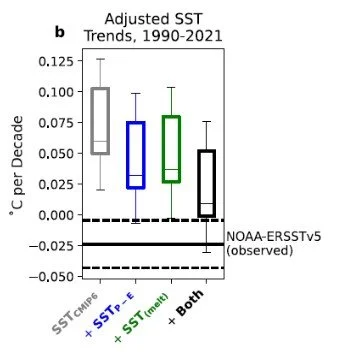

The figure to the left depicts the influence of these “missing” contributions on the rate of warming or cooling of Southern Ocean sea surface temperatures, measured in degrees Celsius per decade. The overestimated warming predicted by climate models is indicated by the gray box on the left; the calculated contributions from rainfall and meltwater by the blue and green boxes, respectively; and the combined contribution by the black box on the right.

You can see that the combined contribution brings the climate model warming rate down to almost zero. So this research provides a much better representation of the actual cooling rate than before and helps to resolve the decades-long mismatch between predicted and observed Southern Ocean temperatures.
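The bookkeeping behind this comparison amounts to addition: the rainfall and meltwater corrections are summed and applied to the modeled warming trend, and the share of the model-observation gap they remove can then be expressed as a fraction, one natural way to read a statement such as "explain up to 60% of the difference." The Python sketch below assumes exactly that additive framing; the trend values are hypothetical placeholders chosen for illustration, not numbers read from the paper's figure.

```python
def corrected_trend(model_trend: float, corrections: list[float]) -> float:
    """Apply additive trend corrections (°C per decade) to a modeled SST trend."""
    return model_trend + sum(corrections)


def fraction_of_gap_closed(model_trend: float, corrected: float, observed: float) -> float:
    """Share of the model-minus-observation gap removed by the corrections."""
    gap = model_trend - observed
    return (model_trend - corrected) / gap


# Hypothetical trends in °C per decade (NOT taken from the paper).
model = 0.25      # modeled warming trend
rain = -0.12      # correction from underestimated rainfall (plus sea ice melt)
melt = -0.08      # correction from missing Antarctic meltwater
observed = -0.15  # observed (cooling) trend

adjusted = corrected_trend(model, [rain, melt])
print(f"adjusted trend: {adjusted:.2f} °C/decade")  # adjusted trend: 0.05 °C/decade
print(f"gap closed: {fraction_of_gap_closed(model, adjusted, observed):.0%}")  # gap closed: 50%
```

With these made-up numbers the corrected model trend falls close to zero, yet half of the gap to the observed cooling remains, which is the kind of partial reconciliation described above.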

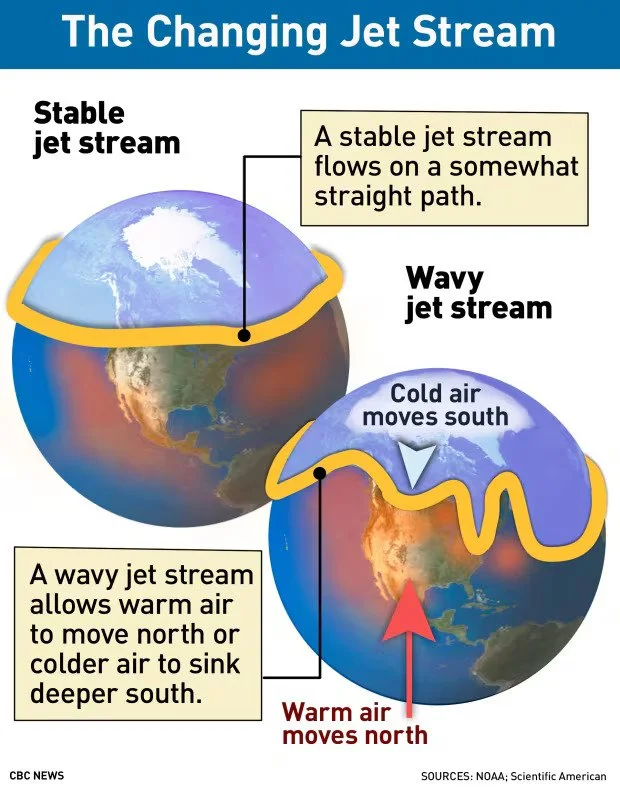

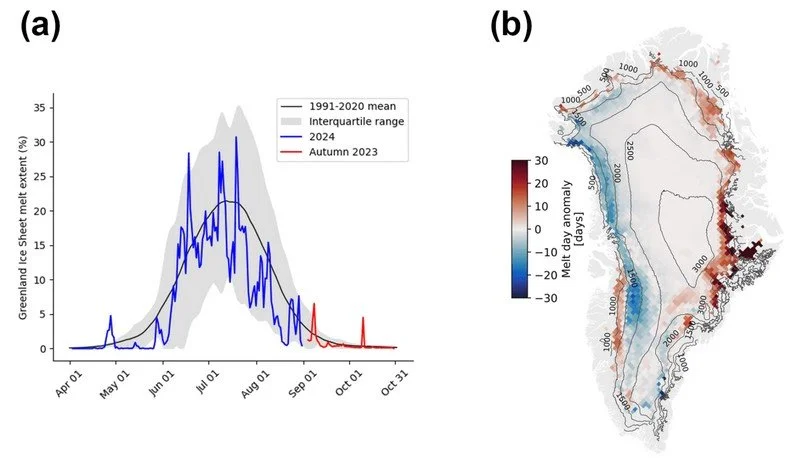

The third paper, published just two months ago in December 2025, attributes the inability of climate models to correctly predict Southern Ocean cooling to the models’ underestimation of Southern Ocean storm strength, and thus of the heat transfer from atmosphere to ocean. Storminess draws colder deep water upward, keeping the surface cooler, which enables the surface to take up more atmospheric heat.

The Swedish, South African and UK researchers employed a robotic “glider” to measure ocean temperature and salinity, together with atmospheric properties above the waves. The robotic observations were then combined with satellite and multi-year climate model data.

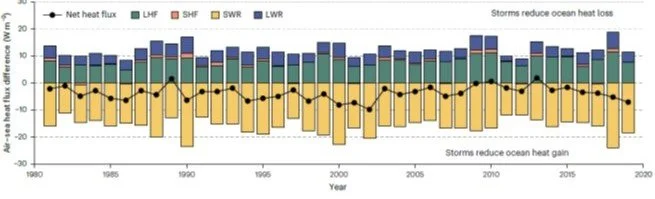

Their results are summarized in the figure below, showing the difference in atmosphere-ocean heat flux between the interior of summer storms and the whole ice-free Southern Ocean. LHF (latent heat flux), SHF (sensible heat flux) and LWR (longwave radiation) all contribute to ocean heat loss; SWR (shortwave radiation) results in ocean heat gain. The black line with dots indicates the net heat flux, which is seen to produce slight cooling overall – though less cooling than from weaker storms.
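The net flux in such a figure is simply the sum of the four components once a sign convention is fixed. The Python sketch below assumes the common convention that positive values warm the ocean, so the shortwave gain enters positively and the three loss terms enter negatively. The class name and all magnitudes are hypothetical placeholders, not values from the study.

```python
from dataclasses import dataclass


@dataclass
class SurfaceHeatFlux:
    """Air-sea heat flux components in W/m², each given as a positive magnitude."""
    swr_gain: float  # shortwave radiation absorbed by the ocean
    lwr_loss: float  # net longwave radiation emitted by the ocean
    lhf_loss: float  # latent heat flux (evaporation)
    shf_loss: float  # sensible heat flux (transfer to colder air)

    def net(self) -> float:
        """Net flux into the ocean; a negative result means the ocean loses heat."""
        return self.swr_gain - (self.lwr_loss + self.lhf_loss + self.shf_loss)


# Hypothetical component values (NOT taken from the study).
example = SurfaceHeatFlux(swr_gain=40.0, lwr_loss=5.0, lhf_loss=30.0, shf_loss=15.0)
print(example.net())  # -10.0
```

Here the three loss terms together slightly outweigh the shortwave gain, giving a modest net cooling, the same qualitative balance the black line in the figure describes.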

Each of these different explanations accounts partially for underestimated Southern Ocean cooling in climate models, so perhaps a combination of all three will resolve the discrepancy.

Next: Challenges to the CO2 Global Warming Hypothesis: (13) Global Warming Entirely from Declining Planetary Albedo