No Convincing Evidence That Extreme Wildfires Are Increasing

According to a new research study by scientists at the University of Tasmania, the frequency and magnitude of extreme wildfires around the globe more than doubled between 2003 and 2023, despite a decline in the total worldwide area burned annually. The study authors link this trend to climate change.

Such a claim doesn’t stand up to scrutiny, however. First, the authors seem unaware of the usual definition of climate change, which is a long-term shift in weather patterns over a period of at least 30 years. Their finding of a 21-year trend in extreme wildfires is certainly valid, but the study interval is too short to draw any conclusions about climate.

Paradoxically, the researchers mention an earlier 2017 study of theirs, stating that the 12-year period of that study of extreme wildfires was indeed too short to identify any temporal climate trend. Why they think 21 years is any better is puzzling!

Second, the study makes no attempt to compare wildfire frequency and magnitude over the last 21 years with those from decades ago, when there were arguably as many hot-burning fires as now. Such a comparison would allow the claim of more frequent extreme wildfires today to be properly evaluated.

Although today’s satellite observations of wildfire intensity far outnumber the observations made before the satellite era, there’s still plenty of old data that could be analyzed. Satellites measure what is called the FRP (fire radiative power), which is the rate at which a fire emits radiant energy: the total rate of energy release less the portion dissipated through convection and conduction. The older FI (fire intensity) also measures the energy released by a fire, and is the energy released per unit time per unit length of fire front, usually expressed in kW per meter; FRP, usually measured in MW (megawatts), is obviously related to FI.
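The two definitions above can be written compactly as follows. The symbols are introduced here for illustration only and are not taken from the study: $\dot{Q}$ denotes a rate of energy release (a power) and $L$ the length of the fire front.

$$
\mathrm{FRP} = \dot{Q}_{\mathrm{total}} - \dot{Q}_{\mathrm{conv}} - \dot{Q}_{\mathrm{cond}} \qquad [\mathrm{MW}]
$$

$$
\mathrm{FI} = \frac{\dot{Q}_{\mathrm{total}}}{L} \qquad [\mathrm{kW\,m^{-1}}]
$$

Written this way, FI is a power per unit length of fire front while FRP is a power, so moving from one to the other depends on how long the fire front is.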

The study authors define extreme wildfires as those with daily FRPs exceeding the 99.99th percentile. Satellite FRP data for all fires in the study period was collected in pixels 1 km on a side, each retained pixel containing just one wildfire “hotspot” after duplicate hotspots were excluded.
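The 99.99th-percentile cut can be sketched as follows. This is a minimal illustration that assumes a nearest-rank percentile over a plain list of daily summed FRP values; the study may have used a different percentile definition, and the function names are hypothetical.

```python
import math
from fractions import Fraction


def nearest_rank_threshold(values, pct):
    """Return the nearest-rank percentile of `values` (pct given in percent).

    Fraction(str(pct)) keeps 99.99 exact, avoiding float rounding in the rank.
    """
    ordered = sorted(values)
    rank = math.ceil(Fraction(str(pct)) * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


def select_extreme(values, pct=99.99):
    """Keep only values strictly above the percentile threshold."""
    threshold = nearest_rank_threshold(values, pct)
    return [v for v in values if v > threshold]


# Hypothetical example: 100,000 distinct daily event FRPs (MW).
daily_frp = list(range(1, 100_001))
extreme = select_extreme(daily_frp)
print(len(extreme))  # 10, i.e. 0.01% of the events
```

With distinct values and an event count divisible by 10,000, exactly 0.01% of events lie above the threshold; ties or a different percentile convention shift that count slightly.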

The total raw dataset included 88.4 million hotspot observations, and this number was reduced to 30.7 million “events” by summing individual pixels in cells approximately 22 x 22 km on a side. Of these 30.7 million events, just 2,913 satisfied the 99.99th-percentile requirement for an extreme wildfire. For the top 20 events, the study’s summed FRP values were in the range of 50,000-150,000 MW, corresponding to average individual FRPs of about 100-300 MW in a 1 x 1 km pixel.
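The aggregation and the per-pixel arithmetic can be sketched as follows. This assumes a simple planar grid of square 22 km cells keyed by day, with hypothetical hotspot tuples of `(day, x_km, y_km, frp_mw)`; the study’s actual gridding scheme may differ. The per-pixel figure also assumes, as the 100-300 MW estimate appears to, that all 22 x 22 = 484 one-kilometer pixels in a cell contribute equally.

```python
from collections import defaultdict

CELL_KM = 22                          # approximate cell size quoted in the text
PIXELS_PER_CELL = CELL_KM * CELL_KM   # 484 one-km pixels per cell


def aggregate_events(hotspots, cell_km=CELL_KM):
    """Sum hotspot FRP (MW) into (day, cell_x, cell_y) "events".

    Assumption: a planar km grid; the study's gridding may differ.
    """
    events = defaultdict(float)
    for day, x_km, y_km, frp_mw in hotspots:
        key = (day, int(x_km // cell_km), int(y_km // cell_km))
        events[key] += frp_mw
    return dict(events)


def mean_pixel_frp(summed_frp_mw, pixels=PIXELS_PER_CELL):
    """Average FRP per 1-km pixel, assuming every pixel in the cell burns."""
    return summed_frp_mw / pixels


# Hypothetical hotspots: (day, x_km, y_km, frp_mw)
hotspots = [
    (1, 0.5, 0.5, 120.0),
    (1, 10.2, 3.0, 80.0),   # same 22-km cell as the first hotspot
    (1, 30.0, 3.0, 15.0),   # neighbouring cell, same day
    (2, 0.5, 0.5, 40.0),    # same cell, next day: a separate event
]
events = aggregate_events(hotspots)
print(len(events))          # 3
print(events[(1, 0, 0)])    # 200.0

# Per-pixel equivalents of the summed FRP range for the top 20 events.
for total in (50_000, 150_000):
    print(total, round(mean_pixel_frp(total)))  # 103, then 310
```

Dividing 50,000 MW and 150,000 MW by 484 pixels gives roughly 103 MW and 310 MW, which matches the 100-300 MW per-pixel range quoted above.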

A glance at the massive dataset shows individual FRP values ranging from the single digits to several hundred MW. If the 20 hottest wildfires during 2003-23 had FRPs above 100 MW, most of the other 2,893 fires above the 99.99th percentile would have had lower FRPs, in the tens and teens of MW.

While intensity data for historical wildfires is sparse, there are occasionally numbers mentioned in the literature. One example can be found in a 2021 paper that reviews past large-area high-intensity wildfires that have occurred in arid Australian grasslands. The paper’s authors state that:

Contemporary fire cycles in these grasslands (spinifex) are characterized by periodic wildfires that are large in scale, high in intensity (e.g., up to c. 14,000 kW) … and driven by fuel accumulations that occur following exceptionally high rainfall years.

An FRP of 14,000 kW, or 14 MW, is comparable to many of the 2,893 FRPs for modern extreme wildfires (excluding the top 20) in the Tasmanian study. The figure below shows the potential fire intensity of bushfires across Australia, with the various colors indicating the FI range. As you can see, the most intense bushfires occur in the southeast and southwest of the country; FI values in those regions can exceed 100 MW per meter, which correspond to FRPs of about 30 MW.

And, although it doesn’t cite FI numbers, a 1976 paper on Australian bushfires from 1945 to 1975 makes the statement that:

The fire control authorities recognise that no fire suppression system has been developed in the world which can halt the forward spread of a high-intensity fire burning in continuous heavy fuels under the influence of extreme fire weather.

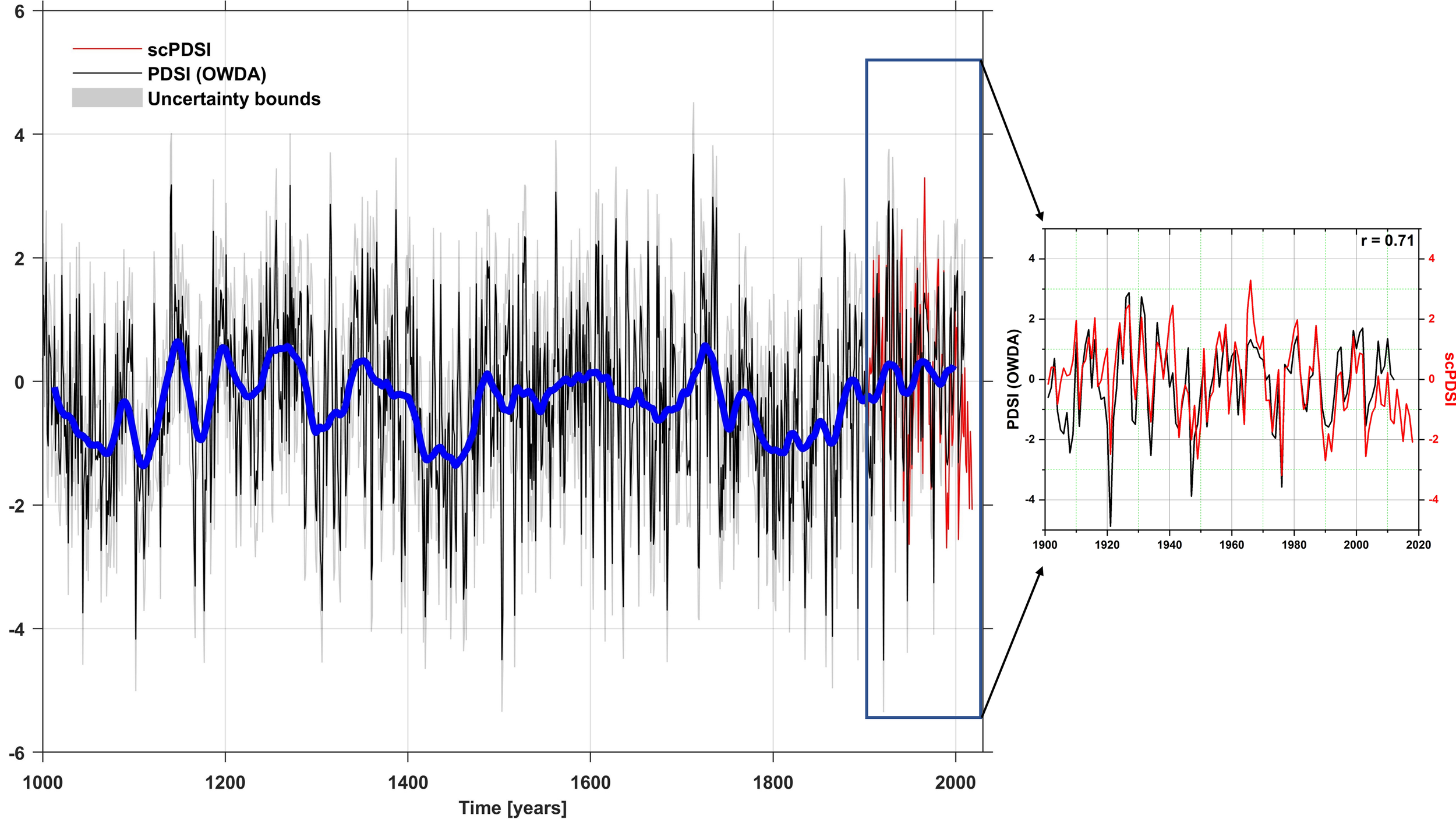

High- and extremely high-intensity wildfires, in Australia at least, are nothing new, and the same is no doubt true for other countries included in the Tasmanian study. The study authors remark correctly that higher temperatures due to global warming and the associated drying out of vegetation and forests both increase wildfire intensity. But there have been equally hot and dry periods in the past, such as the 1930s, when larger areas burned.

So there’s nothing remarkable about the present study. Even though it’s difficult to find good wildfire data in the pre-satellite era, the study authors could easily extend their work back to the onset of satellite measurements in the 1970s.
