The Sun Can Explain 70% or More of Global Warming, Says New Study

Few people realize that the popular narrative of overwhelmingly human-caused global warming, with essentially no contribution from the sun, hinges on a satellite dataset showing that the sun’s output of heat and light has decreased since the 1950s.

But if a different yet plausible dataset is substituted, say the authors of a new study, the tables are turned and a staggering 70% to 87% of global warming since 1850 can be explained by solar variability. The 37 authors form a large international team of scientists, headed by U.S. astrophysicist Willie Soon.

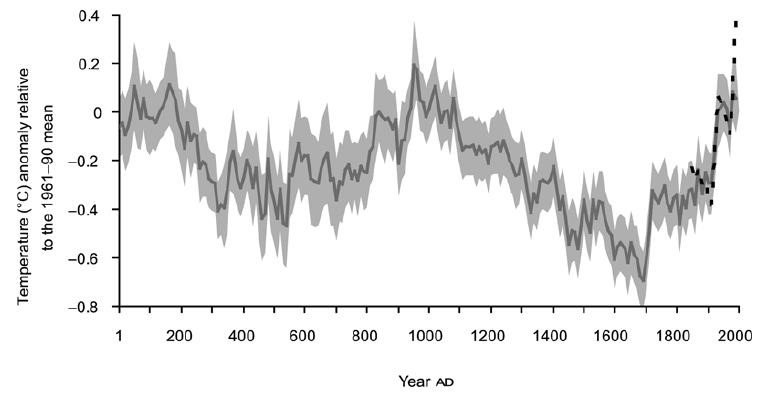

The two rival datasets, each of which implies a different trend in solar output or TSI (total solar irradiance) since the late 1970s when satellite measurements began, are illustrated in the figure below, which includes pre-satellite proxy data back to 1850. The TSI and associated radiative forcing – the difference in the earth’s incoming and outgoing radiation, a difference which produces heating or cooling – are measured in units of watts per square meter, relative to the mean from 1901 to 2000.

The upper graph (Solar #1) is the TSI dataset underlying the narrative that climate change comes largely from human emissions of greenhouse gases, and was used by the IPCC (Intergovernmental Panel on Climate Change) in its 2021 AR6 (Sixth Assessment Report). The lower graph (Solar #2) is a TSI dataset from a different satellite series, as explained in a previous post, and exhibits a more complicated trend since 1950 than Solar #1.

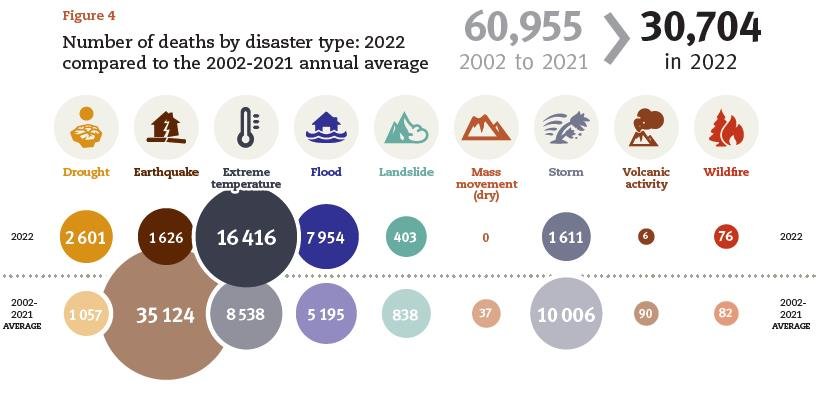

To identify the drivers of global warming since 1850, the study authors carried out a statistical analysis of observed Northern Hemisphere land surface temperatures from 1850 to 2018; the temperature record is shown as the black line in the next figure. Following the approach of the IPCC’s AR6, three possible drivers were considered: two natural forcings (solar and volcanic) and a composite of multiple human-caused or anthropogenic forcings (which include greenhouse gases and aerosols), as employed in AR6.

Time series for the different forcings, or a combination of them, were fitted to the temperature record using multiple linear regression. This differs slightly from the IPCC’s method, which used climate model hindcasts based on the forcing time series as an intermediate step, and which fitted global land and ocean temperatures rather than Northern Hemisphere land-only temperatures.
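The regression step can be illustrated with a minimal sketch. It is not the authors' code: the forcing series are synthetic stand-ins, the helper names `fit_forcings` and `trend_per_century` are hypothetical, and measuring the "share of warming explained" as the ratio of fitted to observed linear trend is an assumption made here for illustration.

```python
import numpy as np


def fit_forcings(temperature, forcings):
    """Least-squares fit of a temperature series to forcing series plus an intercept.

    `forcings` maps a (hypothetical) forcing name to a 1-D array of the same
    length as `temperature`. Returns the coefficients and the fitted series.
    """
    names = list(forcings)
    design = np.column_stack([np.ones(len(temperature))] + [forcings[n] for n in names])
    coefs, *_ = np.linalg.lstsq(design, temperature, rcond=None)
    return dict(zip(["intercept"] + names, coefs)), design @ coefs


def trend_per_century(years, series):
    """Slope of the best-fit straight line, in units per 100 years."""
    return np.polyfit(years, series, 1)[0] * 100.0


# Synthetic stand-ins only: none of these numbers come from the study.
rng = np.random.default_rng(0)
years = np.arange(1850, 2019)
elapsed = years - 1850
solar = 0.3 * np.sin(2 * np.pi * elapsed / 11.0) + 0.002 * elapsed
volcanic = np.zeros(years.size)
volcanic[[33, 141]] = -2.0  # two made-up short-lived cooling spikes
anthropogenic = 2.0 * (elapsed / 168.0) ** 2
temperature = (0.5 * solar + 0.1 * volcanic + 0.4 * anthropogenic
               + rng.normal(0.0, 0.1, years.size))

natural = {"solar": solar, "volcanic": volcanic}
everything = {**natural, "anthropogenic": anthropogenic}

observed_trend = trend_per_century(years, temperature)
for label, forcings in [("natural only", natural), ("natural + anthropogenic", everything)]:
    coefs, fitted = fit_forcings(temperature, forcings)
    share = trend_per_century(years, fitted) / observed_trend
    print(f"{label}: {100 * share:.0f}% of the observed linear trend reproduced")
```

Swapping one solar series for another changes only the `solar` column of the design matrix, which is why the choice of TSI dataset can shift the fitted natural share so strongly.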

The figure below shows the new study’s best fits to the Northern Hemisphere land temperature record for four scenarios using a combination of solar, volcanic and anthropogenic forcings. Scenarios 1 and 2 correspond to the Solar #1 and Solar #2 TSI time series depicted in the first figure above, respectively, combined with volcanic and anthropogenic time series. Scenarios 3 and 4 are the same without the anthropogenic component – that is, with natural forcings only. Any volcanic contribution to natural forcing usually has a cooling effect and is short in duration.

The researchers’ analysis reveals that if the Solar #1 TSI time series is valid, as assumed by the IPCC in AR6, then natural (solar and volcanic) forcings can explain at most 21% of the observed warming from 1850 to 2018 (Scenario 3). In this picture, adding anthropogenic forcing raises the fit to 87% (Scenario 1).

However, when the Solar #1 series is replaced with the Solar #2 series, the natural contribution to overall warming increases from 21% to a massive 70% (Scenario 4), while the fit for combined natural and anthropogenic forcing rises from 87% to 92% (Scenario 2). The better fits with the Solar #2 TSI time series compared to the Solar #1 series are visible on close inspection of the scenario plots.

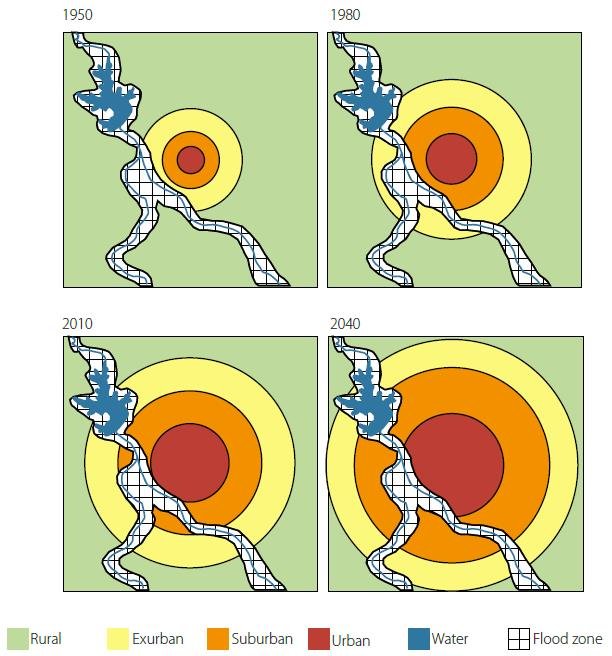

These findings are enhanced further if urban temperatures are excluded from the temperature dataset, on the grounds that urbanization biases temperature measurements upward. The authors have also found that the long-term warming rate for rural temperature stations is only 0.55 degrees Celsius (0.99 degrees Fahrenheit) per century, compared with a rate of 0.89 degrees Celsius (1.6 degrees Fahrenheit) per century for rural and urban stations combined, as illustrated in the figure below.
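As a quick check on the quoted rates, the unit conversion can be sketched as follows. The function name `rate_c_to_f` is hypothetical, and the two input values are simply the figures given in the text.

```python
def rate_c_to_f(rate_c_per_century):
    """Convert a warming rate from degrees C to degrees F per century.

    A rate is a temperature difference, so only the 9/5 scale factor
    applies; the +32 offset used for absolute temperatures does not.
    """
    return rate_c_per_century * 9.0 / 5.0


rural = 0.55      # degrees C per century, rural stations (from the text)
combined = 0.89   # degrees C per century, rural and urban stations (from the text)

print(f"rural:    {rate_c_to_f(rural):.2f} F per century")
print(f"combined: {rate_c_to_f(combined):.2f} F per century")
print(f"rural rate as a share of combined: {rural / combined:.0%}")
```

The conversion reproduces the 0.99 and 1.6 degrees Fahrenheit figures, and shows the rural rate to be a little under two thirds of the combined rate.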

When the various forcing time series are fitted to a temperature record based on rural stations alone, the natural contribution to global warming with the Solar #2 series rises from 70% to 87%.

If the Solar #2 TSI time series represents reality better than the Solar #1 series used by the IPCC, this means that between 70% and 87% of global warming is natural and the human-caused contribution is no more than 30% – the complete opposite of the IPCC’s claim of largely anthropogenic warming.

Unsurprisingly, such an upstart conclusion has raised some hackles in the climate science community. But the three lead authors of the study have effectively countered their critics in lengthy, detailed rebuttals (here and here).

The study authors do point out that “it is still unclear which (if any) of the many TSI time series in the literature are accurate estimates of past TSI,” and say that we cannot be certain yet whether the warming since 1850 is mostly human-caused, mostly natural, or some combination of both. In another paper they remark that, while three of 27 or more different TSI time series can explain up to 99% of the warming, another seven time series cannot account for more than 3%.
