Nitrous Oxide No More a Threat for Global Warming than Methane

Nitrous oxide (N2O), a minor greenhouse gas, has recently come under increasing scrutiny for its supposed global warming potency. But, just as with methane (CH4), concerns over N2O emissions stem from a basic misunderstanding of the science. As I discussed in a previous post, CH4 contributes only one tenth as much to global warming as carbon dioxide (CO2). N2O contributes even less.

The misunderstanding has been elucidated in a recent preprint by a group of scientists including atmospheric physicists William Happer and William van Wijngaarden, who together wrote an earlier paper on CH4. The new paper compares the radiative forcings – disturbances that alter the earth’s climate – of N2O and CH4 to that of CO2.

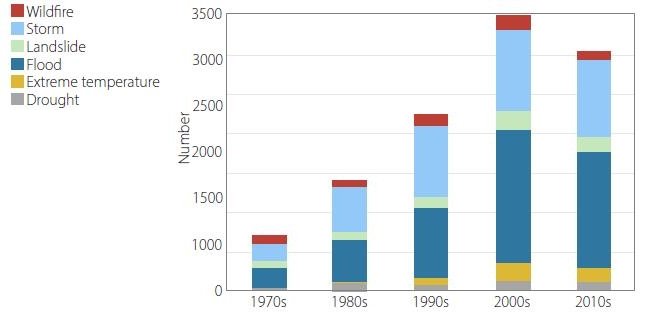

The largest source of N2O emissions is agriculture, particularly the application of nitrogenous fertilizers to boost crop production, together with cow manure management. As the world's population continues to grow, so do fertilizer use and the number of cows. Agriculture accounts for approximately 75% of all N2O emissions in the U.S., and N2O in turn makes up about 7% of the country's total greenhouse gas emissions from human activities.

But the same hype surrounding the contribution of CH4 to climate change extends to N2O as well. The website of the U.S. EPA (Environmental Protection Agency), among many others, claims that the impact of N2O on global warming is a massive 300 times that of CO2 – surpassing even that of CH4, at supposedly 25 times CO2. Happer, van Wijngaarden and their coauthors, however, show that the actual contribution of N2O is tiny, comparable to that of CH4.

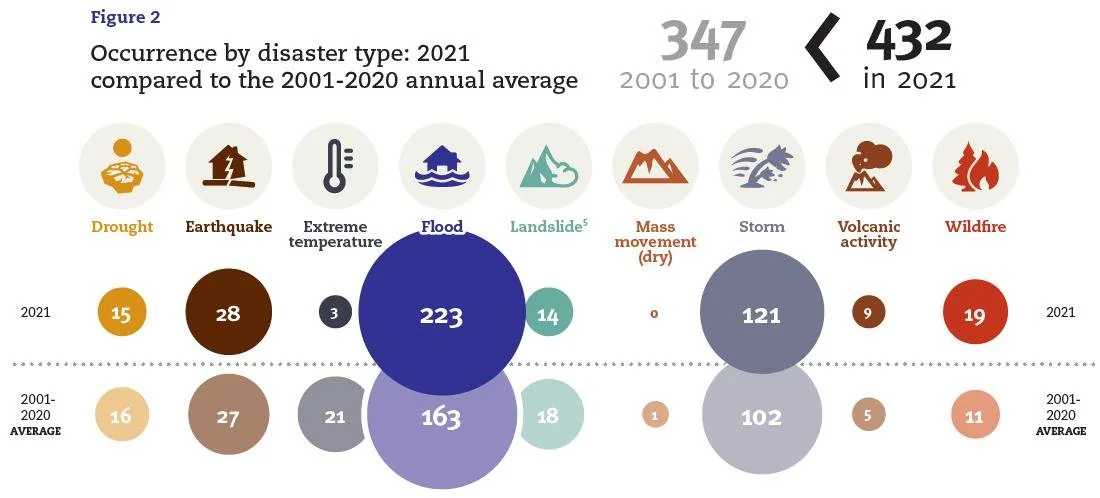

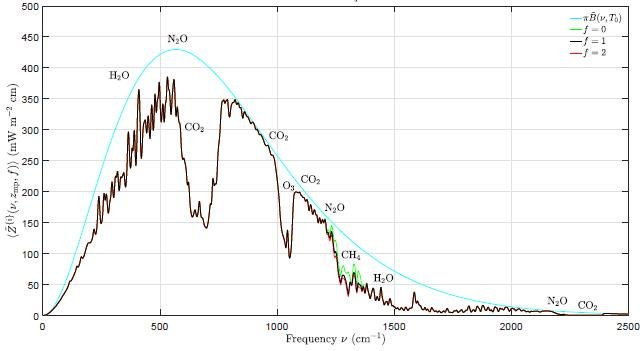

The authors have calculated the spectrum of cooling outgoing radiation for several greenhouse gases at the top of the atmosphere. A calculated spectrum emphasizing N2O is shown in the figure below, as a function of wavenumber or spatial frequency. The dark blue curve is the spectrum for an atmosphere with no greenhouse gases at all, while the black curve is the spectrum including all greenhouse gases. Removing the N2O results in the green curve; the red curve, barely distinguishable from the black curve, represents a doubling of the present N2O concentration.

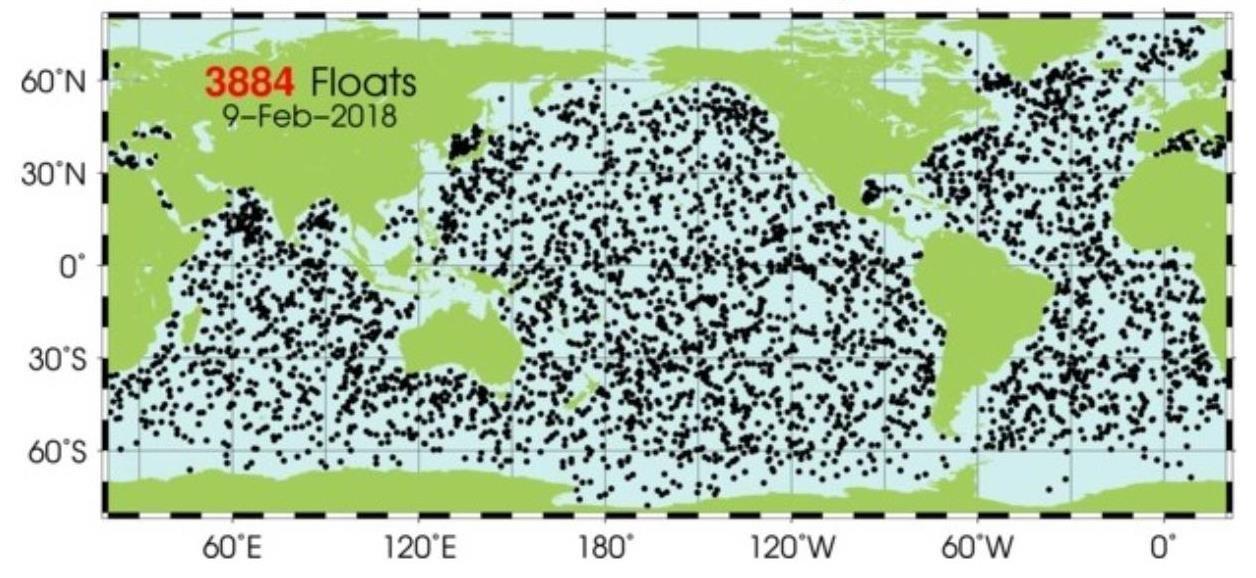

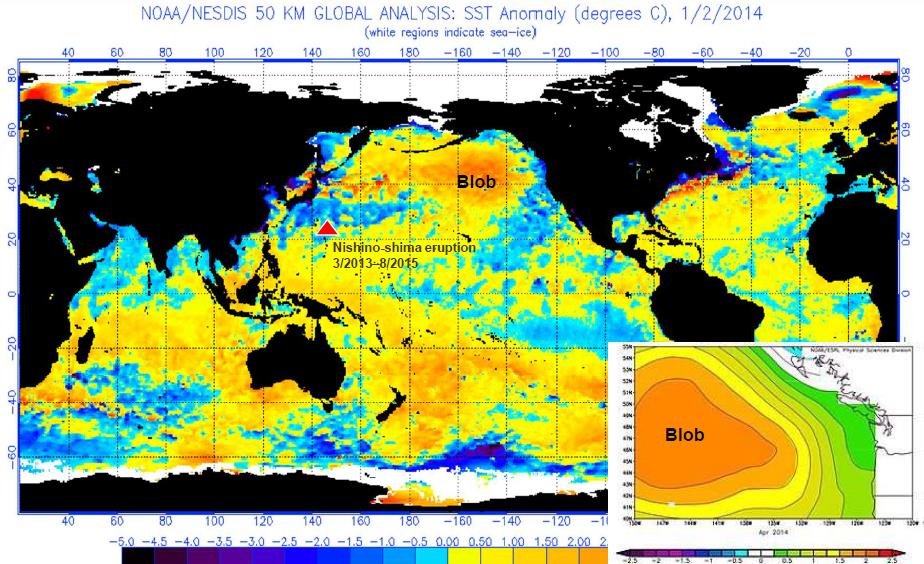

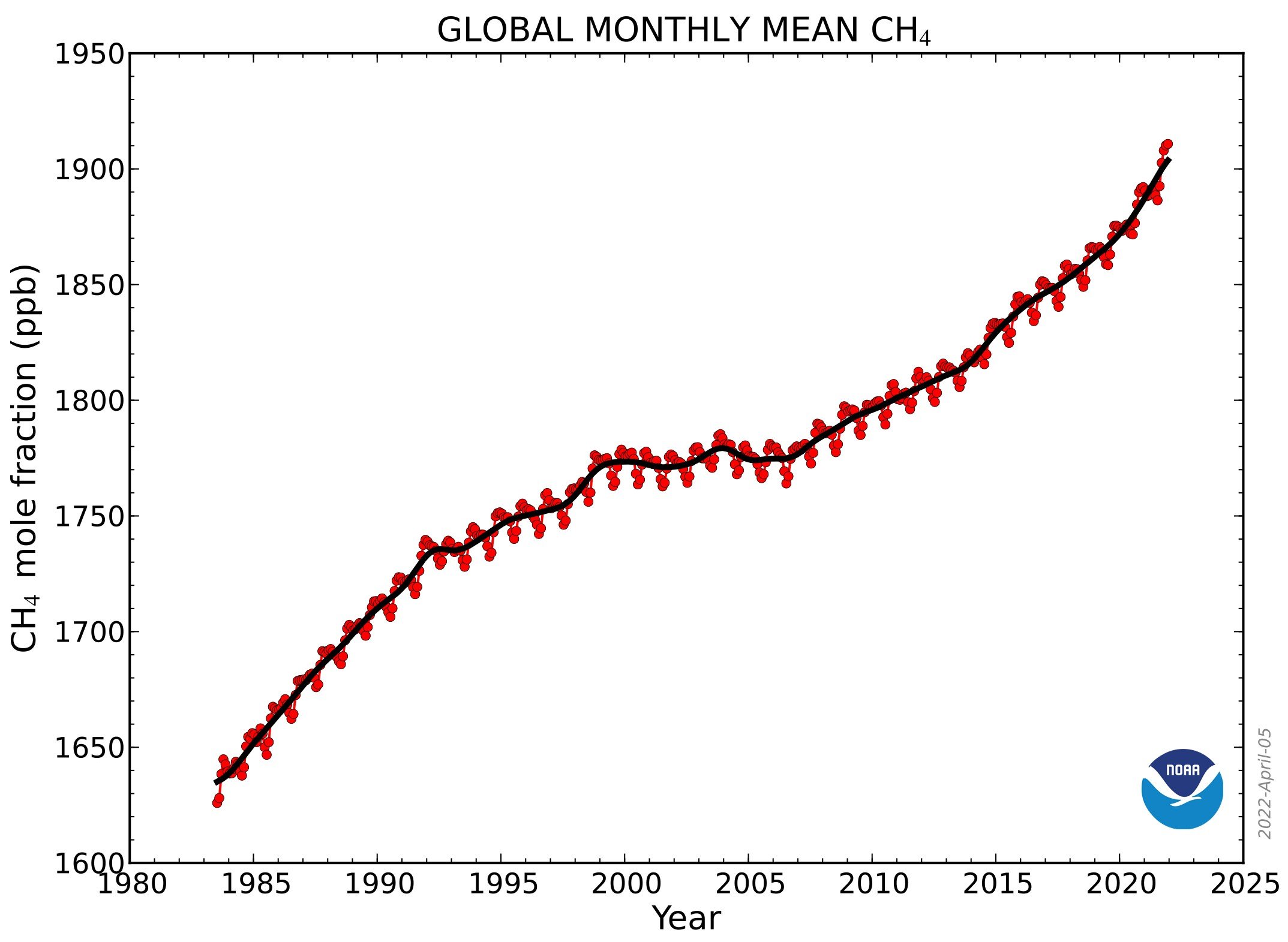

The yearly abundance of N2O in the atmosphere since 1977, as measured by NOAA (the U.S. National Oceanic and Atmospheric Administration), is depicted in the adjacent figure. Currently, the N2O concentration is about 0.34 ppm (340 ppb), three orders of magnitude lower than the CO2 level of approximately 415 ppm, and increasing much more slowly – at a rate of 0.85 ppb per year since 1985, 3000 times smaller than the rate of increase of CO2.
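As a quick consistency check, this short Python sketch uses only the numbers quoted above (340 ppb, 415 ppm, 0.85 ppb per year and the factor of 3000) to compute the CO2/N2O concentration ratio and the CO2 growth rate implied by that factor of 3000. The variable names are illustrative only.

```python
import math

# Figures quoted in the text
N2O_PPB = 340.0       # current N2O concentration, ppb
CO2_PPM = 415.0       # current CO2 concentration, ppm
N2O_RATE_PPB = 0.85   # N2O increase since 1985, ppb per year
RATE_RATIO = 3000.0   # CO2 rate of increase / N2O rate of increase

# Express both concentrations in ppb before comparing them
co2_ppb = CO2_PPM * 1000.0
conc_ratio = co2_ppb / N2O_PPB
orders_of_magnitude = math.log10(conc_ratio)

# CO2 rate of increase implied by the factor of 3000, converted to ppm per year
implied_co2_rate_ppm = N2O_RATE_PPB * RATE_RATIO / 1000.0

print(f"CO2/N2O concentration ratio: {conc_ratio:.0f} "
      f"(about 10^{orders_of_magnitude:.1f})")
print(f"Implied CO2 increase: {implied_co2_rate_ppm:.2f} ppm per year")
```

The concentration ratio comes out at roughly 1,200, which is about three orders of magnitude. The factor of 3000 corresponds to a CO2 rise of about 2.5 ppm per year.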

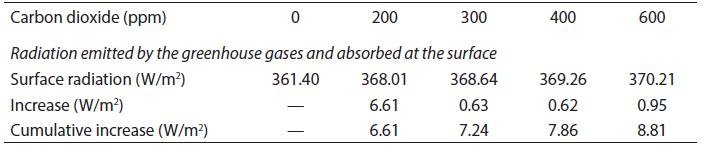

At current atmospheric concentrations of N2O and CO2, the radiative forcing for each additional molecule of N2O is about 230 times larger than that for each additional molecule of CO2. Importantly, however, because the rate of increase in the N2O level is 3000 times smaller, the contribution of N2O to the annual increase in forcing is only 230/3000 or about one thirteenth that of CO2. For comparison, the contribution of CH4 is about one tenth the CO2 contribution.
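The arithmetic in this paragraph rests on a linear approximation. For small changes, the yearly increase in forcing from a gas is roughly its forcing per added molecule times the number of molecules added each year. The ratio to CO2 is therefore the per-molecule ratio divided by how many times faster CO2 accumulates. Here is a minimal Python sketch of that reasoning, using only the 230 and 3000 factors and the CH4 figure quoted in the text. The function name is illustrative and does not come from the paper.

```python
# Ratios quoted in the text
PER_MOLECULE_RATIO = 230.0  # forcing per added N2O molecule / per added CO2 molecule
RATE_RATIO = 3000.0         # CO2 rate of increase / N2O rate of increase
CH4_RELATIVE = 1.0 / 10.0   # CH4 contribution relative to CO2, as stated in the text


def relative_forcing_growth(per_molecule_ratio: float, rate_ratio: float) -> float:
    """Annual forcing increase of a gas relative to that of CO2.

    Annual forcing increase ~ (forcing per molecule) * (molecules added per year),
    so relative to CO2 it is the per-molecule ratio divided by the factor
    by which CO2 accumulates faster.
    """
    return per_molecule_ratio / rate_ratio


n2o_relative = relative_forcing_growth(PER_MOLECULE_RATIO, RATE_RATIO)
print(f"N2O vs CO2: {n2o_relative:.4f}, about 1/{1 / n2o_relative:.0f}")
print(f"CH4 vs CO2: {CH4_RELATIVE:.4f}")
```

This gives about 0.077, or one thirteenth, for N2O, which is slightly below the one tenth quoted for CH4.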

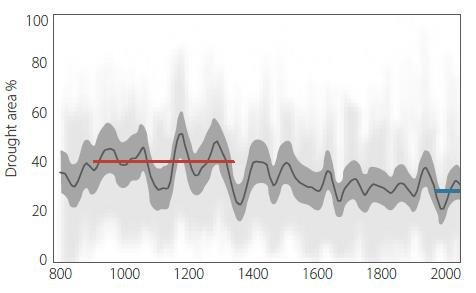

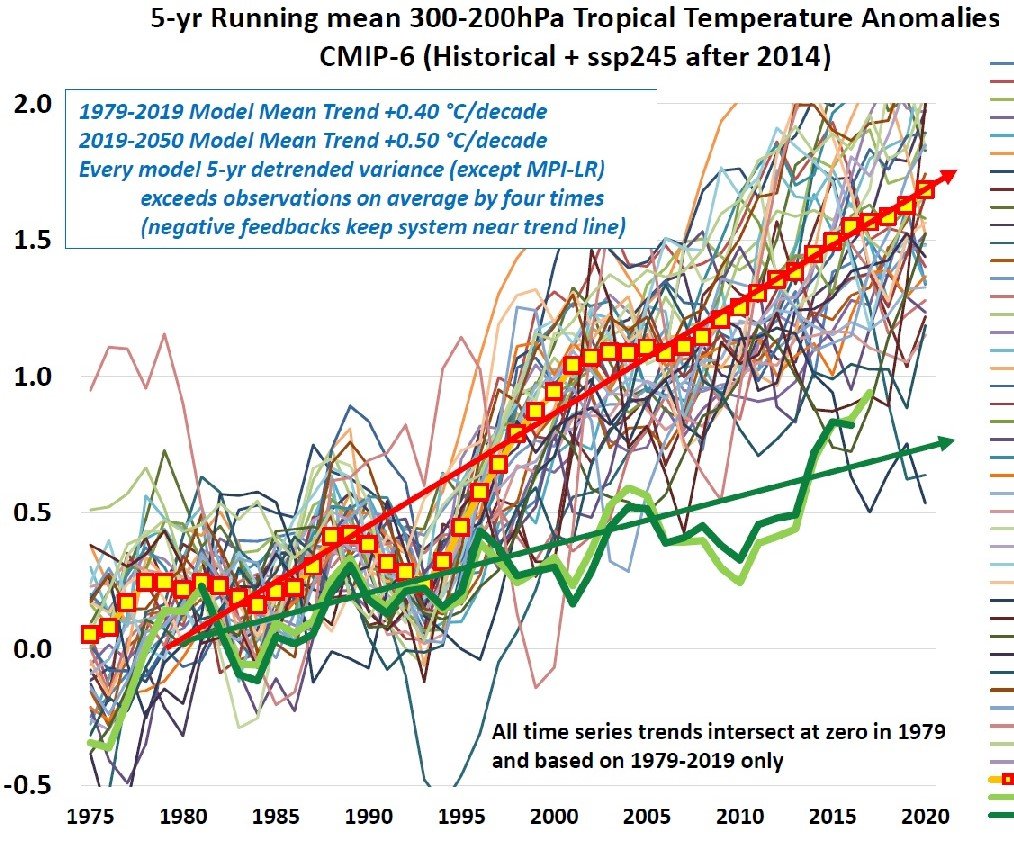

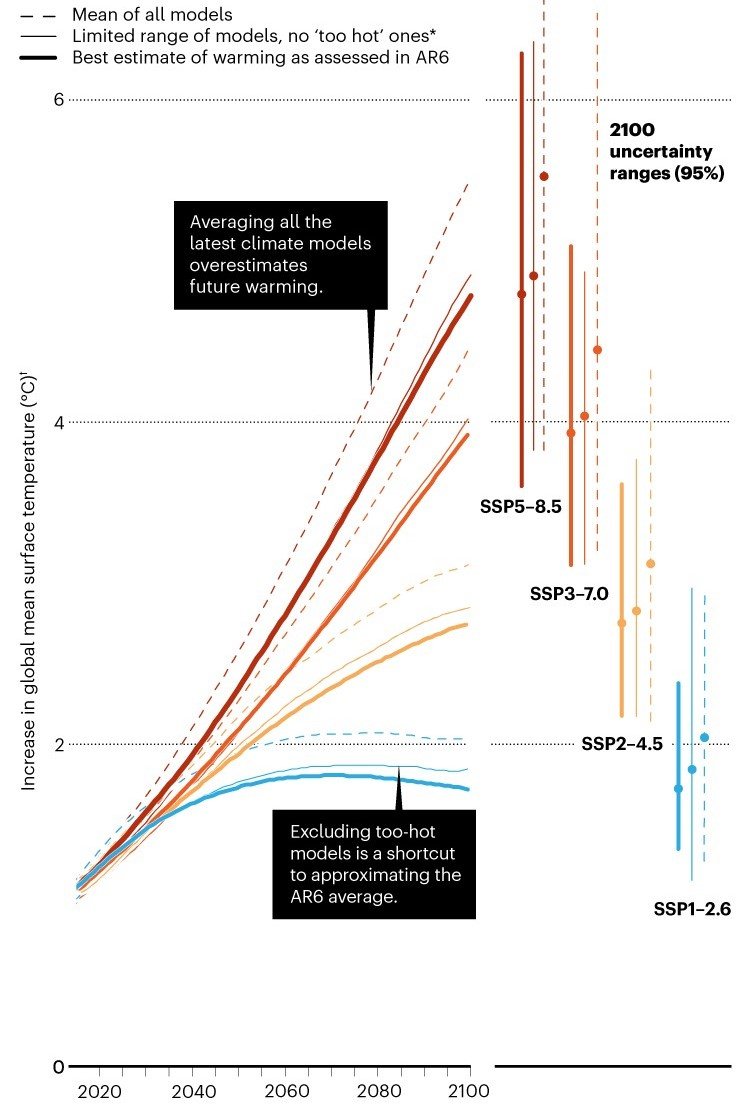

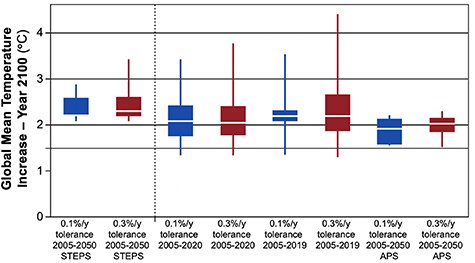

The relative contributions of N2O, CH4 and CO2 to future forcing can be seen in the next figure. It shows the authors' evaluation of expected forcings at the top of the troposphere over the next 50 years. The forcings are increments relative to today, measured in watts per square meter (W/m²). The horizontal lines are the projected temperature increases (ΔT) corresponding to particular values of the forcing increase.
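The horizontal ΔT lines imply a conversion from forcing to temperature. The post does not say which conversion the authors used, so this sketch assumes the simplest common form, a linear relation ΔT = S × ΔF / F2x. Here F2x ≈ 3.7 W/m² is a widely cited forcing for doubled CO2, and S is an assumed climate sensitivity (warming per CO2 doubling). Both constants and the function names are hypothetical placeholders, not values taken from the paper.

```python
# Assumption: linear forcing-to-temperature relation dT = S * dF / F_2X.
# Neither constant below is taken from the paper discussed in the post.
F_2X = 3.7  # W/m^2, widely cited forcing for a doubling of CO2 (assumed)


def warming_for_forcing(delta_f: float, sensitivity: float) -> float:
    """Temperature rise (K) for a forcing increment delta_f (W/m^2),
    given an assumed sensitivity in K per CO2 doubling."""
    return sensitivity * delta_f / F_2X


def forcing_for_warming(delta_t: float, sensitivity: float) -> float:
    """Inverse mapping: the forcing increment (W/m^2) that yields delta_t (K),
    i.e. where a horizontal delta-T line would sit on a forcing axis."""
    return delta_t * F_2X / sensitivity


# Hypothetical sensitivity, for illustration only
S_ASSUMED = 1.5
for dt in (0.1, 0.2, 0.5):
    print(f"dT = {dt} K  <->  dF = {forcing_for_warming(dt, S_ASSUMED):.3f} W/m^2")
```

Under this assumed relation, a higher sensitivity moves each ΔT line to a smaller forcing increment. So where such lines sit depends on the sensitivity chosen.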

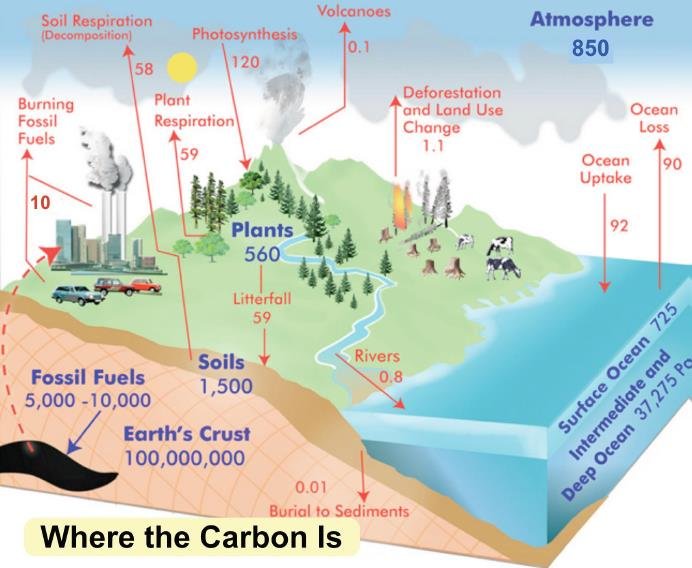

Atmospheric N2O dissociates into nitrogen (N2), the most abundant gas in the atmosphere. N2 is "fixed" by microorganisms in soils and the oceans as ammonium ions, which are then converted to inorganic nitrate ions (NO3-) and various other compounds. These in turn are incorporated into organic molecules such as amino acids and other nitrogen-containing molecules essential for life, like DNA (deoxyribonucleic acid). Nitrogen is the third most important requirement for plant growth, after water and CO2.

Greatly increased use of nitrogen fertilizers is the main reason for massive increases in crop yields since 1961, part of the so-called green revolution in agriculture. The following figure shows U.S. crop yields relative to yields in 1866 for corn, wheat, barley, grass hay, oats and rye. The blue dashed curve is the annual agricultural usage of nitrogen fertilizer in megatonnes (Tg). The strong correlation with crop yields is obvious.

While most soil nitrogen is eventually returned to the atmosphere as N2 molecules, some of the slow increase in the atmospheric N2O level seen in the second figure above may be due to nitrogen fertilizer usage. But the impact of nitrogen fertilizer and natural nitrogen fixation on the nitrogen cycle is not yet clear and more research is needed.

Nonetheless, proposed cutbacks in fertilizer use will drastically reduce agricultural yields around the world, for the sake of only a tiny reduction in global warming potential.

Next: Science on the Attack: The James Webb Telescope and Mysteries of the Universe